1. Introduction

2. Optimizing Speech in Copilot Studio: Tips for Voice-First IVR Systems (this article)

3. Balancing Automation and Human Touch in Generative AI IVR

4. Multilingual IVR: Expanding Customer Support with AI

5. Building Custom AI Models for Personalized Voice Responses

6. Measuring Success: Tracking KPIs for Next-Gen IVR Systems

7. How to Secure Customer Data in AI-Based IVR Environments

8. Compliance and Security extended for Copilot Studio

Voice-enabled agents are changing customer service across call centers, mobile apps, and messaging platforms. As a Solution Architect, I increasingly use Microsoft IVR systems to help businesses automate their customer interactions.

Businesses can significantly improve their self-service capabilities and reduce agent workload by integrating voice-enabled copilots into their customer service platforms. Modern IVR customer service systems rely on speech technology that supports dual-tone multi-frequency (DTMF) input, silence detection, and barge-in control.

Let me show you how to optimize your Microsoft Copilot Studio IVR system. This article covers what you need to build a responsive voice system that works with all 26 voice-optimized locales, from simple configuration steps to advanced speech recognition features.

Setting Up Microsoft IVR Basics

Microsoft Copilot Studio makes building voice-enabled IVR systems simple. Callers can navigate menus hands-free with voice commands or fall back to keypad input.

The built-in natural language processing (NLP) helps your IVR understand what callers want through normal conversation. Microsoft's NLP tools offer:

- Intent recognition - Identifies caller goals from spoken text

- Entity extraction - Captures key details like names and numbers

- Dialog management - Guides dynamic conversations

- Sentiment analysis - Detects emotion and frustration levels

Research shows 57% of customers would rather use touchtone keypads than voice recognition, so your IVR should offer both options. Either way, the system processes the caller's input and responds with information that helps them.

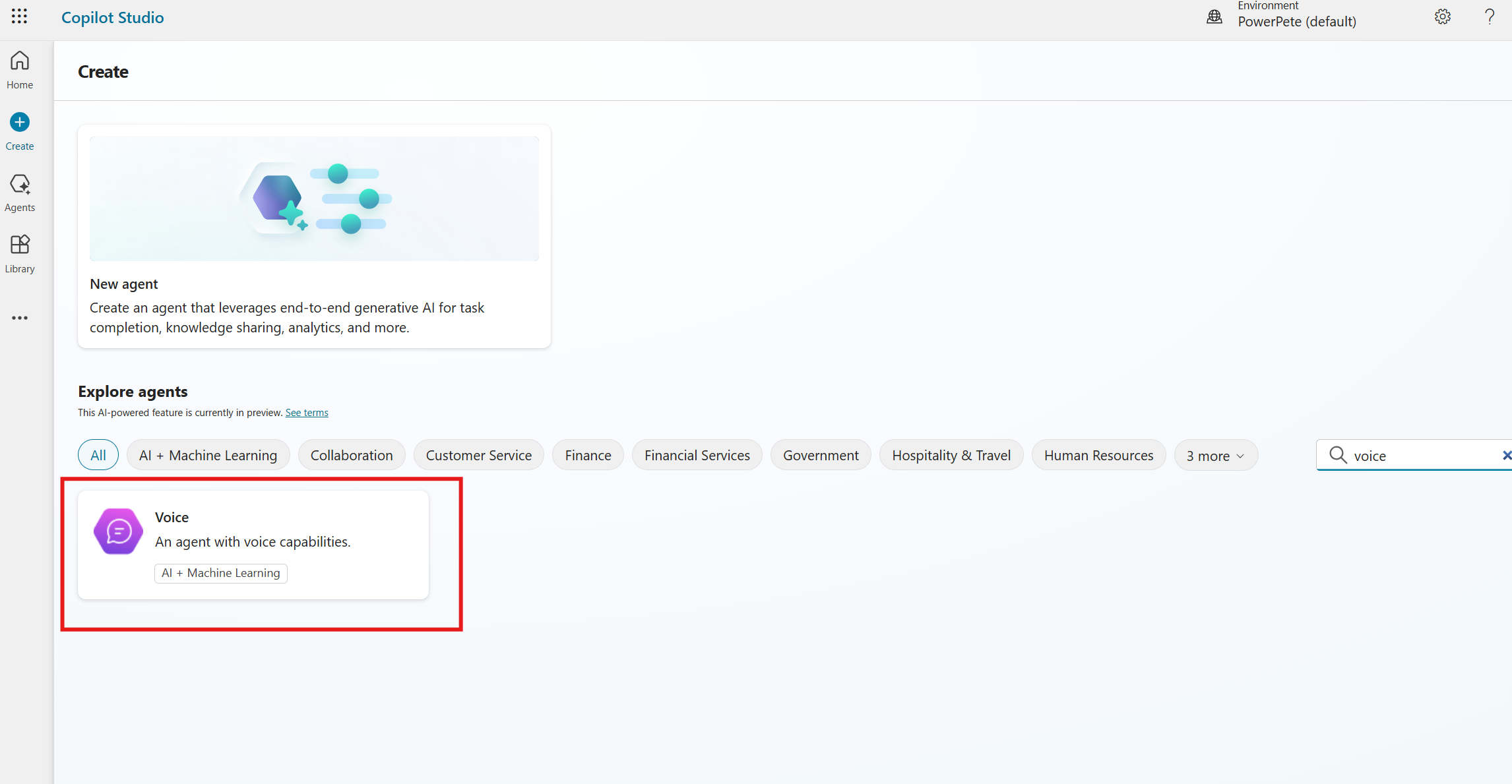

Choose your IVR template

The prebuilt Voice agent template in Copilot Studio forms the foundation you need to create self-service solutions that work. Here's how to start:

- Access Copilot Studio's Home or Create page

- Go to the Explore agents section

- Search for and select the Voice option

The template has these built-in features:

- Natural language interactions

- Touch-tone menu navigation

- Call intent detection

- Account lookup functionality

- Entity validation with reprompting

The template sets up Speech & DTMF (Dual-Tone Multi-Frequency) as your default test mode automatically. You can verify voice features and barge-in capabilities during development with this setting.

Voice

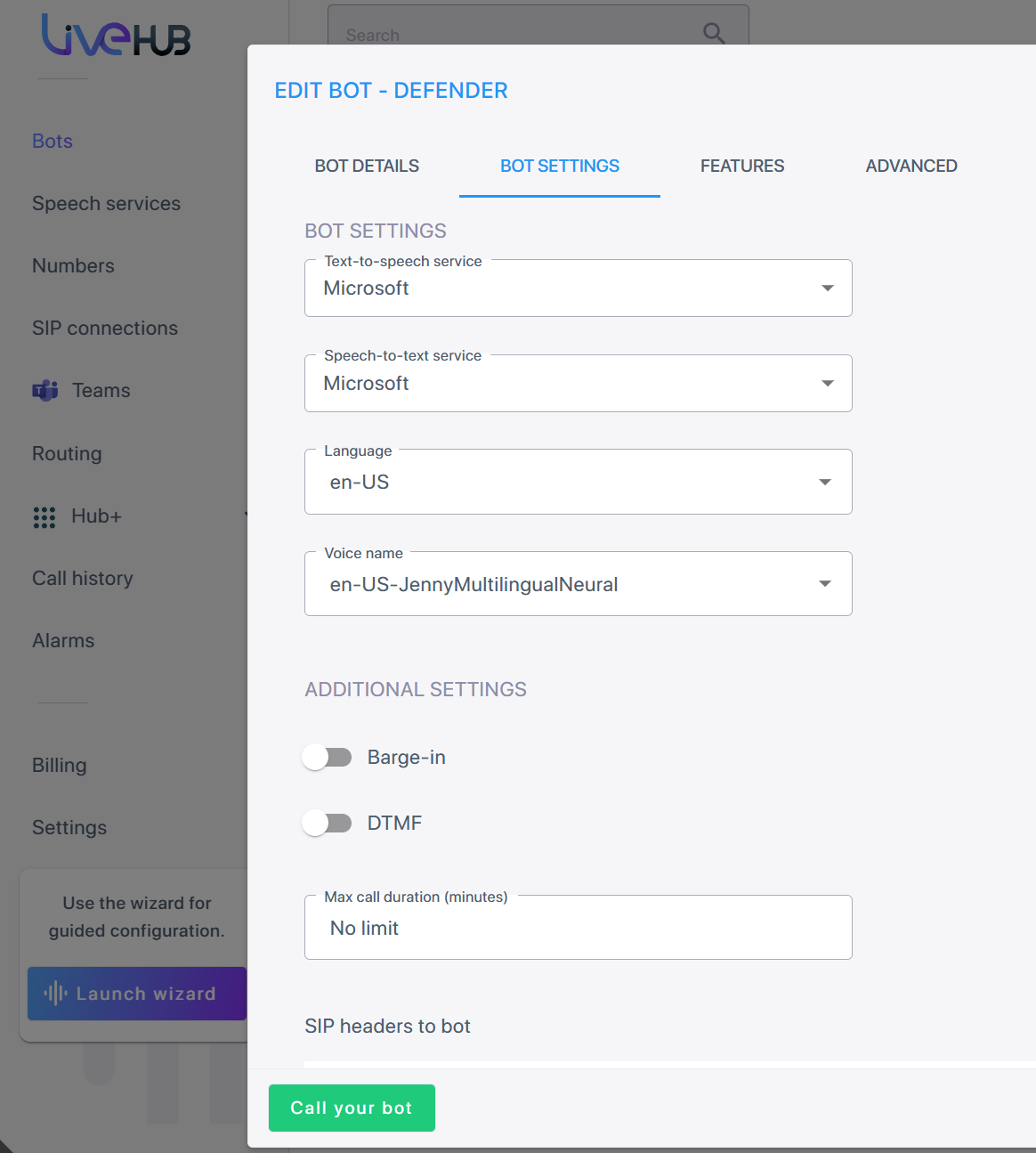

To actually test the bot over a voice channel, you need to connect it to Dynamics 365 or go through Direct Line (e.g. via AudioCodes Live Hub).

That is also the place where you pick your voice and tailor its style, speed, and pitch.

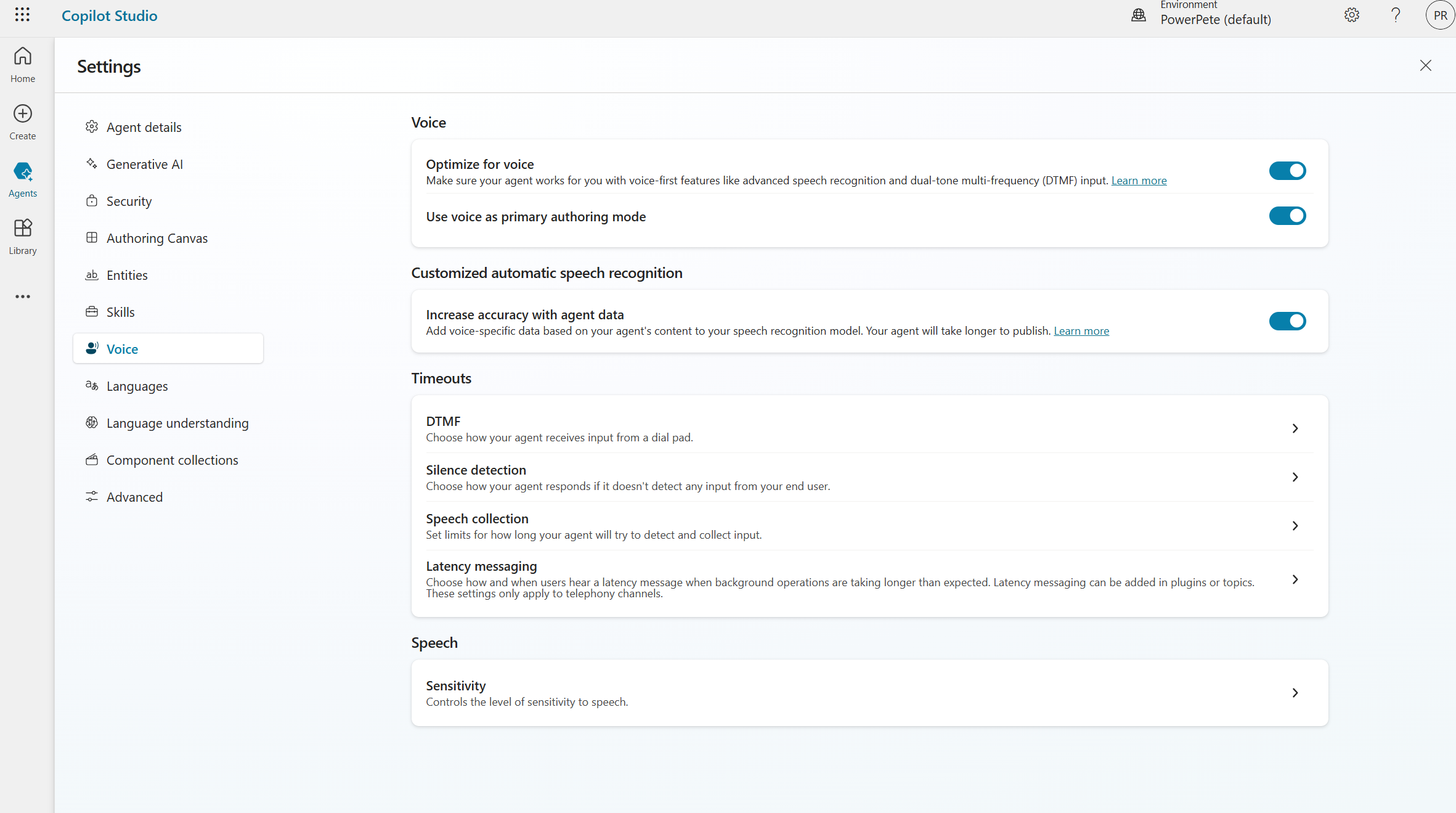

Basic settings first

To tailor your voice agent, there are a few settings you can experiment with. On the settings screen, the main ones to change are:

Silence Detection

- Define how long to wait for user input (defaults are okay)

Speech Collection

- Define how long a user is allowed to speak / how long the bot will record the user input before it starts its next steps (defaults are okay)

DTMF

- Allowed time between digit press settings (defaults are okay)

There are a lot more DTMF settings on the properties of the question nodes inside of your IVR topics.

Speech Sensitivity

- Define how much background noise filtering you want
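To get a feel for how silence detection and speech collection interact, I find it useful to model them in a few lines of Python. This is only a sketch, not a Copilot Studio API: the class, the function, and the default values are hypothetical placeholders, not the product's defaults.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VoiceTimeouts:
    """Hypothetical stand-in for the timeout settings described above."""
    silence_timeout_ms: int = 5000   # assumed value: how long to wait for the caller to start
    max_utterance_ms: int = 12000    # assumed value: how long the caller may keep speaking


def classify_turn(timeouts: VoiceTimeouts,
                  speech_start_ms: Optional[int],
                  speech_length_ms: int = 0) -> str:
    """Classify one caller turn; None means the caller never spoke."""
    if speech_start_ms is None or speech_start_ms >= timeouts.silence_timeout_ms:
        return "silence_detected"
    if speech_length_ms > timeouts.max_utterance_ms:
        return "utterance_cut_off"
    return "utterance_captured"
```

A caller who starts after one second and talks for three seconds falls into `utterance_captured`; a caller who says nothing ends up in `silence_detected`, which is where a reprompt would normally kick in.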

Optimizing the conversation

With the basic settings in place, let's look at how to make conversations more fluent and resilient.

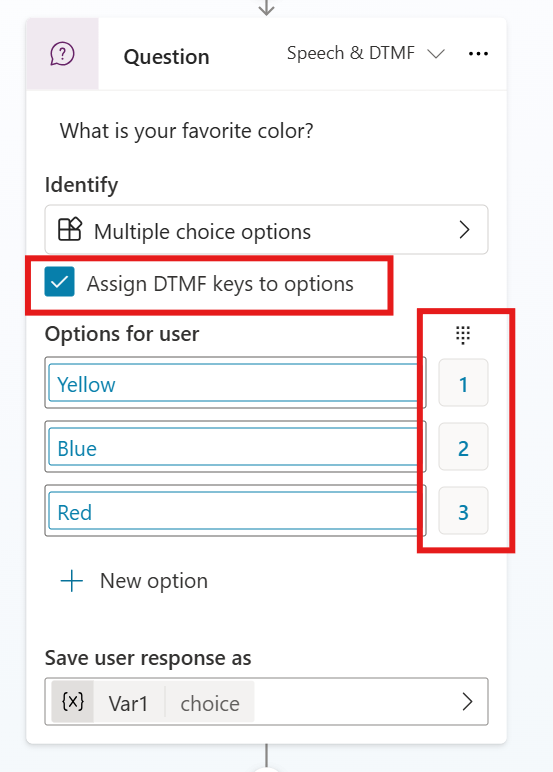

When you set up a question node in your topics, several extra options appear once you have turned on the voice capabilities of your agent.

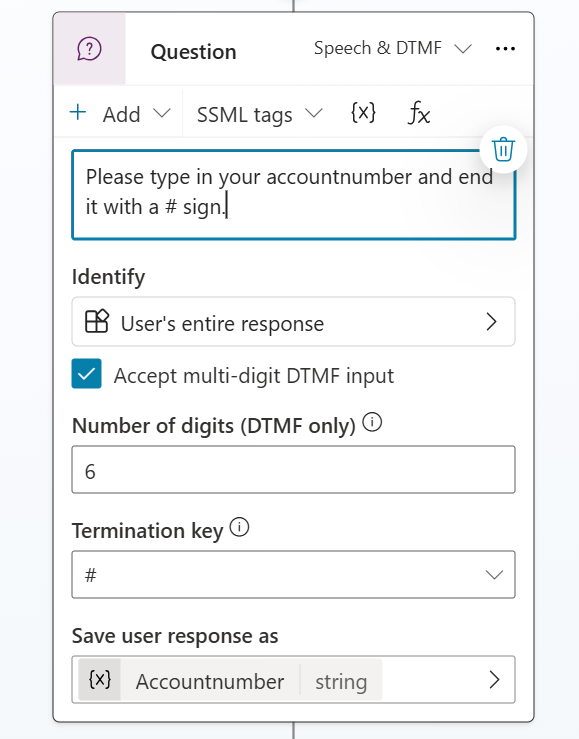

DTMF

Dual-tone multi-frequency (DTMF) input lets users interact through phone keypads. The system provides two DTMF input types:

Single-digit DTMF

- Maps individual keys to menu options

- Supports global commands triggered at any point

- Handles unknown dialpad press triggers

Multi-digit DTMF

- Accepts sequences for account numbers or codes

- Configurable digit length requirements

- Optional termination keys (* or #)
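The difference between the two input types is easier to see in code. The following is a rough Python sketch of the logic, not how Copilot Studio implements it; the menu entries, the global command, and both function names are made up for illustration.

```python
from typing import Iterable

# Hypothetical menu and global command, for illustration only.
MENU = {"1": "billing", "2": "orders", "0": "agent"}
GLOBAL_COMMANDS = {"*": "main_menu"}


def route_single_digit(key: str) -> str:
    """Map one key press to a destination; global commands win over the menu."""
    if key in GLOBAL_COMMANDS:
        return GLOBAL_COMMANDS[key]
    return MENU.get(key, "unknown_dialpad_press")


def collect_digits(keys: Iterable[str], max_length: int, terminator: str = "#") -> str:
    """Collect digits until the terminator is pressed or max_length is reached."""
    digits = []
    for key in keys:
        if key == terminator:
            break
        if key.isdigit():
            digits.append(key)
        if len(digits) == max_length:
            break
    return "".join(digits)
```

With this sketch, `route_single_digit("9")` falls through to the unknown dialpad press case, and `collect_digits("1234#56", 8)` stops at the termination key and yields `"1234"`.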

The essential parameters for optimal DTMF handling include:

- Interdigit timeout: Maximum wait time between key presses

- Termination timeout: Duration to wait for completion keys

- DTMF caching: Allows users to input keys without waiting for prompts
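To illustrate what the interdigit timeout does, here is a small Python simulation over timestamped key presses. It is a simplified sketch under my own assumptions (the function name, the tuple format, and the 3000 ms value are hypothetical), and it ignores DTMF caching and the separate termination timeout.

```python
from typing import List, Tuple


def collect_with_timeout(presses: List[Tuple[int, str]],
                         interdigit_timeout_ms: int,
                         terminator: str = "#") -> str:
    """Collect keys from (timestamp_ms, key) pairs sorted by time.

    Input is treated as complete when the gap between two presses
    exceeds the interdigit timeout or the terminator key is pressed.
    """
    digits = []
    last_ts = None
    for ts, key in presses:
        if last_ts is not None and ts - last_ts > interdigit_timeout_ms:
            break  # the caller paused too long; later presses are ignored
        if key == terminator:
            break
        digits.append(key)
        last_ts = ts
    return "".join(digits)


# The fourth press arrives 5.1 seconds after the third, so it is dropped.
presses = [(0, "1"), (500, "2"), (900, "3"), (6000, "4")]
collected = collect_with_timeout(presses, interdigit_timeout_ms=3000)
```

A timeout that is too short cuts off slow callers mid-number, while one that is too long makes every entry feel sluggish, which is why the defaults are a sensible starting point.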

Control how interruptions work with barge-in

For long menus, you might want to let the user barge in and press a key or say an option while the menu or question is still being read out. In most cases, however, you probably do not want that at all.

Luckily, Microsoft lets you tailor this per node. On message nodes and question nodes, open the properties and look for an option called "Allow Barge in".

Use SSML to customize audio

Speech Synthesis Markup Language (SSML) is a powerful tool in Copilot Studio that lets you fine-tune the speech output of your voice-enabled agents. With SSML tags, you can customize aspects of the spoken text such as tone, language, and pitch. This section walks you through how to use SSML in Copilot Studio effectively.

Basic SSML Usage in Copilot Studio

To use SSML in Copilot Studio, follow these steps:

- Select a Message or Question node in your bot flow.

- Change the mode to Speech & DTMF.

- Use the SSML tags menu to select and insert tags, or manually enter them.

Customizing Speech with SSML

Changing Tone and Emphasis

To change the tone or add emphasis to specific words or phrases, use the <emphasis> tag:

Normal text <emphasis level="strong">emphasized text</emphasis> normal text again.

Levels can be "strong", "moderate", or "reduced".

Modifying Pitch, Rate, and Volume

Use the <prosody> tag to adjust pitch, speaking rate, and volume:

<prosody rate="slow" pitch="low" volume="loud">This text will be spoken slowly, with a low pitch, and loudly.</prosody>

- Rate options: x-slow, slow, medium, fast, x-fast

- Pitch options: x-low, low, medium, high, x-high

- Volume options: silent, x-soft, soft, medium, loud, x-loud
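If you generate prompts dynamically (for example in a backend that feeds text to your agent), a tiny helper can prevent typos in these attribute values. The sketch below is plain Python string building, not part of Copilot Studio; the function name `prosody` is my own.

```python
from typing import Optional
from xml.sax.saxutils import escape

# The named values listed above.
RATES = ("x-slow", "slow", "medium", "fast", "x-fast")
PITCHES = ("x-low", "low", "medium", "high", "x-high")
VOLUMES = ("silent", "x-soft", "soft", "medium", "loud", "x-loud")


def prosody(text: str,
            rate: Optional[str] = None,
            pitch: Optional[str] = None,
            volume: Optional[str] = None) -> str:
    """Wrap text in a <prosody> tag, rejecting values outside the named options."""
    attrs = []
    for name, value, allowed in (("rate", rate, RATES),
                                 ("pitch", pitch, PITCHES),
                                 ("volume", volume, VOLUMES)):
        if value is None:
            continue
        if value not in allowed:
            raise ValueError(f"{name} must be one of {allowed}, got {value!r}")
        attrs.append(f'{name}="{value}"')
    if not attrs:
        return escape(text)  # nothing to adjust, return the escaped text as-is
    attr_text = " ".join(attrs)
    return f"<prosody {attr_text}>{escape(text)}</prosody>"
```

Calling `prosody("Your balance is low", rate="slow", volume="loud")` produces a tag with only the two attributes you set, and special characters such as `&` in the text are escaped so the markup stays valid.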

Changing Language

For multilingual bots, use the <lang> tag to switch languages mid-sentence:

This is in English. <lang xml:lang="es-ES">Esto está en español.</lang> Back to English.

Adding Pauses

Insert pauses using the <break> tag:

Let's pause for a moment<break time="2s"/> and continue.
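A typical IVR use for pauses is reading an account or reference number back to the caller. As a hedged illustration, this Python helper (the name and the 300 ms default are my own choices) splits a number into groups and puts a `<break>` between them.

```python
def read_back_digits(digits: str, group_size: int = 3, pause_ms: int = 300) -> str:
    """Space out digits and insert an SSML pause between groups."""
    if not digits.isdigit():
        raise ValueError("digits must contain only 0-9")
    groups = [digits[i:i + group_size] for i in range(0, len(digits), group_size)]
    spoken = [" ".join(group) for group in groups]  # "123" becomes "1 2 3"
    return f'<break time="{pause_ms}ms"/>'.join(spoken)
```

For `"123456"` this yields `1 2 3<break time="300ms"/>4 5 6`, which tends to be easier to follow than one long run of digits.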

Advanced SSML Techniques

Phonetic Pronunciation

Use the <phoneme> tag for precise pronunciation control:

<phoneme alphabet="ipa" ph="təˈmeɪtoʊ">tomato</phoneme>

Audio Insertion

Insert audio files using the <audio> tag:

<audio src="https://example.com/sound.wav"/>

Note: Ensure the audio file is accessible to the bot user.

Best Practices

- Test thoroughly: Always test your SSML-enhanced messages to ensure they sound natural and convey the intended meaning.

- Use sparingly: Over-use of SSML can make speech sound unnatural. Use it only when necessary for clarity or emphasis.

- Consider context: Adjust SSML usage based on the context of the conversation and the user's needs.

- Localization: When using SSML for multiple languages, ensure that the tags are appropriate for each language's characteristics.

By mastering these SSML techniques in Copilot Studio, you can create more engaging, natural-sounding voice interactions that enhance the user experience of your bot.
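Part of "test thoroughly" can be automated before you ever place a call. The following Python sketch checks that an SSML fragment is well-formed XML and only uses the tags covered in this article. It is a basic lint under my own assumptions (the wrapping `<speak>` element and the allowed-tag list are choices I made); it does not tell you which tags your voice channel actually supports.

```python
import xml.etree.ElementTree as ET
from typing import List

# Tags discussed in this article, plus the wrapper used for parsing.
ALLOWED_TAGS = {"speak", "emphasis", "prosody", "lang", "break", "phoneme", "audio"}


def check_ssml(fragment: str) -> List[str]:
    """Return a list of problems found in an SSML fragment (empty means OK)."""
    try:
        root = ET.fromstring(f"<speak>{fragment}</speak>")
    except ET.ParseError as err:
        return [f"not well-formed: {err}"]
    return [f"unexpected tag: {el.tag}" for el in root.iter()
            if el.tag not in ALLOWED_TAGS]
```

An unclosed `<prosody>` tag, for example, is reported as not well-formed, so you catch it in a unit test instead of hearing it on a live call.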

More advanced natural language processing

Microsoft gives you the option to switch from the built-in Copilot Studio language understanding to an externally managed CLU (conversational language understanding) model that you can tailor even further.

Your NLP will work better when you:

- Train models using diverse datasets

- Add new examples to cover emerging language patterns

- Monitor intent recognition accuracy

- Log calls to analyze failure points

- Listen to live interactions to catch issues

- Survey callers about pain points
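Monitoring intent recognition accuracy does not need heavy tooling to get started. Assuming you can export a log of labeled calls as pairs of expected and recognized intent (that export format is my assumption, not a Copilot Studio feature), a per-intent accuracy can be computed like this:

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def intent_accuracy(log: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Compute accuracy per expected intent from (expected, recognized) pairs."""
    total = Counter()
    correct = Counter()
    for expected, recognized in log:
        total[expected] += 1
        if expected == recognized:
            correct[expected] += 1
    return {intent: correct[intent] / total[intent] for intent in total}


# Hypothetical sample log: one billing call was misrouted to orders.
sample_log = [("billing", "billing"), ("billing", "orders"), ("orders", "orders")]
accuracy = intent_accuracy(sample_log)
```

Intents with a low score are the first candidates for additional training examples.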

Speech recognition and NLP together help your IVR handle thousands of complex customer requests. Remember to include an option for live agent transfer. The system passes all conversation details during escalation.

Test it!

The system lets bots handle voice calls of up to one hour. Your voice channel also comes with transcription, recording, and custom automated messages to improve the customer experience.

Make sure you test your setup completely before going live. The voice agent template works with both speech and keypad inputs, giving your customers the choice they prefer.

Conclusion

Microsoft IVR systems with Copilot Studio excel at automating customer service. Success depends on proper setup, smart menu design and consistent testing.

Speech recognition works alongside natural language processing and DTMF inputs to create a flexible system that adapts to user preferences. Your IVR handles complex customer interactions while you retain control through built-in monitoring tools.

The system's reliability depends heavily on testing. Quality checks for calls, performance tracking, and customer feedback point out what needs improvement. Microsoft's analytics dashboard gives you up-to-the-minute data to help you optimize your IVR system.

Clear menu structures, well-timed settings and full testing scenarios help build an IVR system that works. Your customers get better service while your agents handle less work.
